Data Engineer Course in Visakhapatnam

This article explains what a Data Engineering course involves: its purpose, target audience, core curriculum, and the distinct role data engineering plays in the data ecosystem.

What is a Data Engineering Course?

A Data Engineering course is a specialized training program that teaches the skills needed to design, build, and manage the foundational “plumbing” and “infrastructure” for data. While Data Analysts and Data Scientists consume data to find insights, Data Engineers build the robust, scalable, and reliable systems that deliver that data in a usable form.

They are the architects and builders of the data world.

Who is this Course For?

- Software Developers & Engineers: Looking to specialize in data-intensive systems and distributed computing.

- Data Analysts & Scientists: Who want to move “upstream” to build the data pipelines they wish they had.

- IT Professionals & Database Administrators (DBAs): Transitioning to cloud-based, large-scale data systems.

- Computer Science/Engineering Graduates: Interested in building high-performance, scalable systems.

- Career Changers: Professionals with a strong technical background and an interest in big data.

Core Components & Syllabus (Full Details)

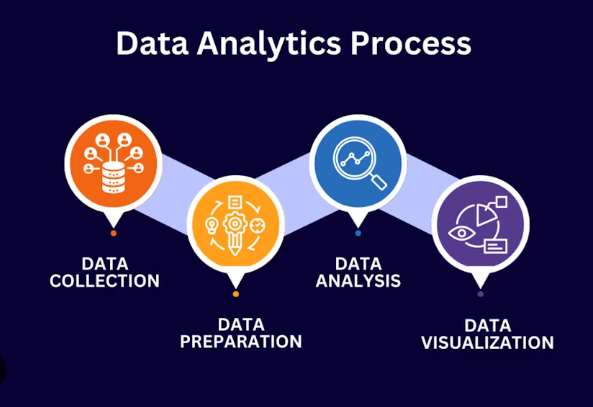

A comprehensive Data Engineering course is structured around the modern data stack and the data lifecycle, from ingestion to consumption. The chart above illustrates this architecture and the key technologies involved at each stage.

Part 1: Foundational Programming & Databases

- Advanced Programming:

- Python: Deep focus, especially for data manipulation (Pandas) and scripting.

- SQL: The most important language for a Data Engineer. Mastery of complex queries, query optimization, and window functions is non-negotiable.

- Scala/Java: Useful for JVM-based big data frameworks such as Apache Spark and Apache Kafka, although Spark also offers a Python API (PySpark).

- Database Fundamentals:

- Relational Databases (OLTP): PostgreSQL, MySQL. Understanding schema design, normalization, and ACID properties.

- NoSQL Databases: Key-Value (Redis), Document (MongoDB), Wide-Column (Cassandra). Understanding their use cases.
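To make the SQL point concrete, here is a minimal sketch of window functions using Python’s built-in `sqlite3` module. It assumes the bundled SQLite is version 3.25 or newer (the release that added window functions), and the `orders` table and its rows are hypothetical sample data. `SUM(...) OVER (...)` produces a running total per customer and `ROW_NUMBER()` numbers each customer’s orders, without collapsing rows the way `GROUP BY` would.

```python
import sqlite3

# In-memory database; the orders table and its rows are hypothetical sample data.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL,
        order_date  TEXT    NOT NULL,
        amount      REAL    NOT NULL
    );
    INSERT INTO orders VALUES
        (1, 101, '2024-01-05', 40.0),
        (2, 101, '2024-01-20', 60.0),
        (3, 102, '2024-01-07', 25.0),
        (4, 101, '2024-02-02', 10.0),
        (5, 102, '2024-02-11', 75.0);
    """
)

# Window functions compute a value for every row over a "window" of related rows
# (here: all earlier orders of the same customer) while keeping each row intact.
query = """
SELECT
    customer_id,
    order_id,
    amount,
    SUM(amount) OVER (
        PARTITION BY customer_id ORDER BY order_date
    ) AS running_total,
    ROW_NUMBER() OVER (
        PARTITION BY customer_id ORDER BY order_date
    ) AS order_seq
FROM orders
ORDER BY customer_id, order_date;
"""

rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
```

The same `PARTITION BY ... ORDER BY ...` pattern carries over largely unchanged to PostgreSQL, MySQL 8, and cloud data warehouses.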

Part 2: The Big Data Ecosystem & Distributed Systems

- Core Concepts: Understanding distributed computing, parallel processing, and cluster management.

- Apache Hadoop (The Foundation): Understanding HDFS (storage) and the MapReduce (processing) paradigm.

- Apache Spark (The Workhorse): The dominant framework for large-scale data processing.

- Spark Core & Architecture: RDDs, Drivers, and Executors.

- Spark SQL & DataFrames: For structured data processing.

- Spark Streaming: For processing real-time data streams, today mainly through the Structured Streaming API built on DataFrames.
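The MapReduce paradigm is easiest to see in the classic word-count example. This is a single-process sketch in plain Python, not Hadoop code, and the function names `map_phase`, `shuffle_phase`, and `reduce_phase` are hypothetical labels for the three stages. On a real cluster, mappers and reducers run on different nodes and the shuffle moves intermediate pairs across the network.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    # Map: emit a (word, 1) pair for every word in one input record.
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    # Shuffle: group all emitted values by key, as the framework does between stages.
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(groups)


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    # Reduce: combine every value for one key into a single result.
    return key, sum(values)


def word_count(lines: list[str]) -> dict[str, int]:
    mapped = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


counts = word_count(["Spark runs on clusters", "Hadoop and Spark", "spark"])
print(counts)
```

In Spark, the same logic is usually a short chain of RDD operations (`flatMap`, `map`, `reduceByKey`) or a `groupBy().count()` on a DataFrame, with the engine handling distribution across executors.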

Part 3: Data Pipeline Development & Orchestration

This is the core responsibility of a Data Engineer.

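- ETL vs. ELT: Understanding the difference between transforming data before loading it (Extract, Transform, Load) and the modern paradigm of loading raw data first and transforming it inside the warehouse (Extract, Load, then Transform).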

- Data Ingestion (Extract):

- Batch Ingestion: Apache Sqoop (retired by Apache in 2021 but still found in older Hadoop stacks) or custom scripts.

- Stream Ingestion: Apache Kafka or AWS Kinesis for handling real-time data streams.

- Workflow Orchestration: Automating and monitoring data pipelines. Mastery of tools like:

- Apache Airflow: The industry standard for defining, scheduling, and monitoring workflows as code.

- Prefect and Dagster: Newer Python-based alternatives that also define pipelines as code.
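Both ideas in this part can be sketched with the Python standard library alone. Raw records are loaded into a staging table first and only then cleaned with SQL inside the database (ELT), and the three tasks run in the dependency order computed by `graphlib.TopologicalSorter` (Python 3.9+). This is not Airflow’s API: an orchestrator such as Airflow adds scheduling, retries, and monitoring on top of this kind of dependency graph. The source records and task names are hypothetical.

```python
import sqlite3
from graphlib import TopologicalSorter

# Hypothetical source records, standing in for an API response or a file extract.
SOURCE = [
    {"id": 1, "email": " Alice@Example.com ", "amount": "19.99"},
    {"id": 2, "email": "bob@example.com", "amount": "5.00"},
    {"id": 3, "email": None, "amount": "12.50"},
]

conn = sqlite3.connect(":memory:")
state: dict[str, list] = {}


def extract() -> None:
    # E: pull the raw records from the source unchanged.
    state["raw"] = list(SOURCE)


def load() -> None:
    # L: land the raw data as-is in a staging table; no cleaning happens yet.
    conn.execute("CREATE TABLE raw_orders (id INTEGER, email TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (:id, :email, :amount)", state["raw"])


def transform() -> None:
    # T: clean and type the data inside the database with SQL (the "T" after "L").
    conn.execute(
        """
        CREATE TABLE orders AS
        SELECT id,
               LOWER(TRIM(email)) AS email,
               CAST(amount AS REAL) AS amount
        FROM raw_orders
        WHERE email IS NOT NULL
        """
    )


TASKS = {"extract": extract, "load": load, "transform": transform}
# Each task maps to the set of tasks it depends on: the edges of a small DAG.
DEPENDENCIES = {"load": {"extract"}, "transform": {"load"}}

run_order = list(TopologicalSorter(DEPENDENCIES).static_order())
for name in run_order:
    TASKS[name]()

print(run_order)
print(conn.execute("SELECT * FROM orders ORDER BY id").fetchall())
```

Keeping the raw table around is the practical benefit of ELT: if the cleaning rule changes, the transform can be rerun without extracting from the source again.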

Part 4: Cloud Data Platforms & Architecture

Modern data engineering is cloud-native.

- Core Cloud Concepts: IaaS, PaaS, SaaS. Focus on data services.

- Specialization in one major cloud provider:

- AWS: S3, Redshift, Glue, EMR, Kinesis, Lambda.

- Azure: Azure Data Lake Storage, Synapse Analytics, Data Factory, Databricks.

- GCP: Cloud Storage, BigQuery, Dataflow, Dataproc.

- Data Warehousing: Designing and managing cloud data warehouses like Snowflake, BigQuery, and Redshift.

- Data Lake & Lakehouse Architecture: Building and managing centralized repositories for structured and unstructured data. Understanding columnar (Parquet, ORC) and row-based (Avro) file formats.
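Why columnar formats such as Parquet and ORC suit analytics can be shown with a simplified, in-memory sketch. This is not the real Parquet file format, and the records and helper names are hypothetical. Pivoting rows into per-column lists means an aggregate reads only the column it needs, and storing similar values together makes encodings such as dictionary encoding (one of the encodings Parquet supports) effective.

```python
# Hypothetical sales records in row order, the way a row-oriented format such as Avro stores them.
ROWS = [
    {"country": "IN", "city": "Visakhapatnam", "sales": 120},
    {"country": "IN", "city": "Hyderabad", "sales": 80},
    {"country": "US", "city": "Seattle", "sales": 200},
    {"country": "IN", "city": "Chennai", "sales": 50},
]


def to_columns(rows: list[dict]) -> dict[str, list]:
    # Pivot records into one contiguous list per column (a columnar layout).
    columns: dict[str, list] = {name: [] for name in rows[0]}
    for row in rows:
        for name in columns:
            columns[name].append(row[name])
    return columns


def dictionary_encode(values: list) -> tuple[list, list[int]]:
    # Store each distinct value once and replace repeats with small integer codes.
    dictionary: list = []
    positions: dict = {}
    codes: list[int] = []
    for value in values:
        if value not in positions:
            positions[value] = len(dictionary)
            dictionary.append(value)
        codes.append(positions[value])
    return dictionary, codes


columns = to_columns(ROWS)

# An aggregate over one column touches only that column's values, not whole records.
total_sales = sum(columns["sales"])

# Low-cardinality columns like "country" shrink well once their values sit together.
dictionary, codes = dictionary_encode(columns["country"])

print(total_sales)        # 450
print(dictionary, codes)  # ['IN', 'US'] [0, 0, 1, 0]
```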

Part 5: DataOps, DevOps & Productionization

- Infrastructure as Code (IaC): Using Terraform or CloudFormation to provision cloud resources.

- CI/CD for Data Pipelines: Automating testing and deployment of data code.

- Data Quality & Testing: Implementing checks and monitoring to ensure reliable data (using tools like Great Expectations, dbt tests).

- Data Governance & Cataloging: Managing data lineage, security, and discoverability.
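The data quality checks in this part can be sketched in plain Python. The three functions are hypothetical helpers loosely modeled on dbt’s built-in `not_null`, `unique`, and `accepted_values` tests; they are not the dbt or Great Expectations API. Each check returns the offending row indices or values, so an empty result means the check passed.

```python
from collections import Counter

# Hypothetical batch of customer rows awaiting validation.
ROWS = [
    {"customer_id": 1, "email": "a@example.com", "status": "active"},
    {"customer_id": 2, "email": None, "status": "active"},
    {"customer_id": 2, "email": "c@example.com", "status": "paused"},
]


def check_not_null(rows: list[dict], column: str) -> list[int]:
    # Indices of rows where the column is missing or None.
    return [i for i, row in enumerate(rows) if row.get(column) is None]


def check_unique(rows: list[dict], column: str) -> list:
    # Values that appear more than once in the column.
    counts = Counter(row[column] for row in rows)
    return sorted(value for value, n in counts.items() if n > 1)


def check_accepted_values(rows: list[dict], column: str, accepted: set) -> list[int]:
    # Indices of rows whose value falls outside the allowed set.
    return [i for i, row in enumerate(rows) if row[column] not in accepted]


results = {
    "email not_null": check_not_null(ROWS, "email"),
    "customer_id unique": check_unique(ROWS, "customer_id"),
    "status accepted_values": check_accepted_values(ROWS, "status", {"active", "churned"}),
}
# Keep only the checks that found problems.
failed = {name: bad for name, bad in results.items() if bad}
print(failed)
```

In a pipeline, a non-empty `failed` dictionary would typically stop the run or raise an alert before the batch reaches downstream tables.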

Key Features & Teaching Methodology

- Heavy Focus on Distributed Systems: Understanding why and how systems scale.

- Hands-On with Cloud Platforms: A significant portion of the course is spent working in AWS, Azure, or GCP using real cloud credits.

- Project-Centric: Building an end-to-end data pipeline that ingests, processes, and serves data.

- Tool & Technology Deep Dives: Going beyond the basics of tools like Spark, Airflow, and Kafka.

Benefits of Learning Data Engineering

- Extremely High Demand & Low Supply: One of the most in-demand and well-compensated tech roles.

- Foundation of AI/ML: The “AI boom” depends heavily on robust data engineering, because models are only as good as the data pipelines that feed them.

- Technical Depth & Challenge: Work on complex, large-scale distributed systems problems.

- Strategic Impact: Enable the entire data-driven decision-making process of a company.

Challenges & Important Considerations

- Steep Learning Curve: Requires knowledge of software engineering, distributed systems, and data systems.

- Rapidly Evolving Landscape: New tools and cloud services emerge constantly.

- On-Call & Operational Burden: Responsible for the health of critical data infrastructure, which can lead to off-hours work.

- Complexity of Debugging: Debugging a distributed data pipeline is significantly more complex than debugging a single application.

What to Look for in a Good Data Engineering Course

- Comprehensive & Modern Curriculum: Must cover Spark, Kafka, a Cloud Platform, Airflow, and SQL in depth.

- Hands-On Cloud Labs: Must provide access to AWS, Azure, or GCP to build real pipelines.

- Capstone Project: A non-negotiable requirement to build a portfolio piece.

- Focus on Fundamentals: Not just tools, but the underlying concepts of distributed systems and data modeling.

- Industry-Experienced Instructors: Teachers must have built data pipelines in a production environment.

Career Paths After a Data Engineering Course

- Data Engineer

- Big Data Engineer

- Cloud Data Engineer

- MLOps Engineer

- Data Architect

- Analytics Engineer

Conclusion

A Data Engineering course is a demanding but incredibly rewarding investment. It prepares you for a career as a foundational builder in the digital economy. Data Engineers construct the highways on which all data travels; without them, the modern data science and analytics machine grinds to a halt. If you enjoy software engineering, solving complex infrastructure problems, and working with massive scale, data engineering is a premier career path.

We talk a lot about how companies are becoming “data-driven.” It’s one of those phrases that looks good in presentations and strategy meetings. With dashboards updating in real time, predictive models guiding decisions, and analytics tools showing exactly what’s working and what’s not, everything seems impressive on the surface.

Those front-end visuals steal the spotlight. They’re exciting, and they feel powerful.

Yet, behind all of it, something much quieter is going on.

The Invisible Engine Room

There’s a team whose work never appears in reports or dashboards. Their names might not be attached to insights, and most people don’t know their tools or processes.

Still, they’re the reason those insights even exist.

Data engineers work in the background, quietly solving problems most people never realise were there. They build and maintain the systems that move data from one place to another, clean it up, make sure it’s usable, and prepare it so analysts and scientists can work their magic.

Every data-driven decision begins with a solid technical foundation, and data engineers build that foundation every single day.

It’s Not Just Pipelines

To someone outside the field, it might sound simple. Move data, store it somewhere, let people use it. The reality is nothing close to that.

Data engineers spend time structuring workflows, building scalable infrastructure, integrating APIs that never behave the same way twice, and managing data from tools that were never meant to work together. They think long-term while putting out daily fires. They ensure systems don’t break when business needs change suddenly or when traffic doubles without warning.
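One everyday defence against unreliable APIs is retrying transient failures with exponential backoff. The sketch below assumes that `ConnectionError` and `TimeoutError` signal transient problems; `retry_with_backoff` and `flaky_fetch` are hypothetical names, not a library API. The `sleep` parameter is injectable so the demo runs instantly.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `call()`, retrying transient errors with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error to the caller
            # The upper bound doubles each attempt (0.5s, 1s, 2s, 4s, ...) up to max_delay;
            # sleeping a random amount below it keeps many clients from retrying in lockstep.
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))
    raise RuntimeError("unreachable")


calls = {"n": 0}


def flaky_fetch() -> dict:
    # Hypothetical API call: fails twice with a transient error, then succeeds.
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("temporary network glitch")
    return {"status": "ok"}


# A no-op sleep keeps the demo instant; in production, keep the default time.sleep.
result = retry_with_backoff(flaky_fetch, sleep=lambda seconds: None)
print(result, calls["n"])  # {'status': 'ok'} 3
```

Errors outside `retry_on`, such as a `ValueError` from malformed input, are raised immediately, since retrying them would only repeat the failure.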

There is a layer of thoughtfulness behind every working data flow. Without that structure, dashboards wouldn’t just be blank. They’d be pointing in the wrong direction.

Complexity Behind the Scenes

Most users won’t know about the broken jobs at 2 AM, the schema changes no one warned about, or the flawed data that would’ve caused big problems if someone hadn’t caught it early.
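Catching unannounced schema changes early can be as simple as comparing each incoming record with an expected schema. A minimal sketch, where `EXPECTED_SCHEMA`, `detect_schema_drift`, and the sample record are all hypothetical:

```python
# Hypothetical contract for an orders feed: column name -> expected Python type.
EXPECTED_SCHEMA: dict[str, type] = {"order_id": int, "customer_id": int, "amount": float}


def detect_schema_drift(record: dict, expected: dict[str, type]) -> dict[str, list[str]]:
    """Compare one incoming record with the expected schema; return only the problems found."""
    actual = set(record)
    drift = {
        "missing": sorted(set(expected) - actual),     # columns that disappeared
        "unexpected": sorted(actual - set(expected)),  # columns nobody announced
        "wrong_type": sorted(                          # columns whose type changed
            name
            for name, expected_type in expected.items()
            if record.get(name) is not None and not isinstance(record[name], expected_type)
        ),
    }
    return {kind: names for kind, names in drift.items() if names}


# Simulated upstream change: "amount" renamed to "total_amount", IDs now sent as strings.
incoming = {"order_id": 42, "customer_id": "101", "total_amount": 19.99}
print(detect_schema_drift(incoming, EXPECTED_SCHEMA))
```

Run at ingestion time, a check like this makes a renamed column fail loudly instead of silently turning into nulls on a dashboard.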

Data engineers don’t usually chase the spotlight. Their work isn’t performative. They measure success in what doesn’t go wrong. In systems that just run. In the quiet confidence that their pipelines are solid, even when the business shifts direction overnight.

While data scientists often ask what can be done with available information, data engineers ask whether that information is even ready, clean, reliable, and up to date. The questions might not sound glamorous, but they’re essential to making anything work.
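Those questions can be turned into explicit, automated checks. A minimal sketch, assuming the pipeline records a row count and a UTC load timestamp for each table; `readiness_report` and the six-hour threshold are hypothetical choices, not a standard:

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def readiness_report(
    row_count: int,
    last_loaded_at: datetime,
    max_age: timedelta,
    min_rows: int = 1,
    now: Optional[datetime] = None,
) -> dict[str, bool]:
    """Answer 'is this table ready to use?' with two simple, explicit checks."""
    now = now or datetime.now(timezone.utc)
    return {
        "has_rows": row_count >= min_rows,            # did the load produce any data?
        "is_fresh": now - last_loaded_at <= max_age,  # is it recent enough to trust?
    }


# Hypothetical table last loaded at 02:30 UTC and checked at 09:00 UTC (6.5 hours later).
checked_at = datetime(2024, 6, 1, 9, 0, tzinfo=timezone.utc)
loaded_at = datetime(2024, 6, 1, 2, 30, tzinfo=timezone.utc)

report = readiness_report(
    row_count=1_250,
    last_loaded_at=loaded_at,
    max_age=timedelta(hours=6),
    now=checked_at,
)
print(report)  # {'has_rows': True, 'is_fresh': False}
```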